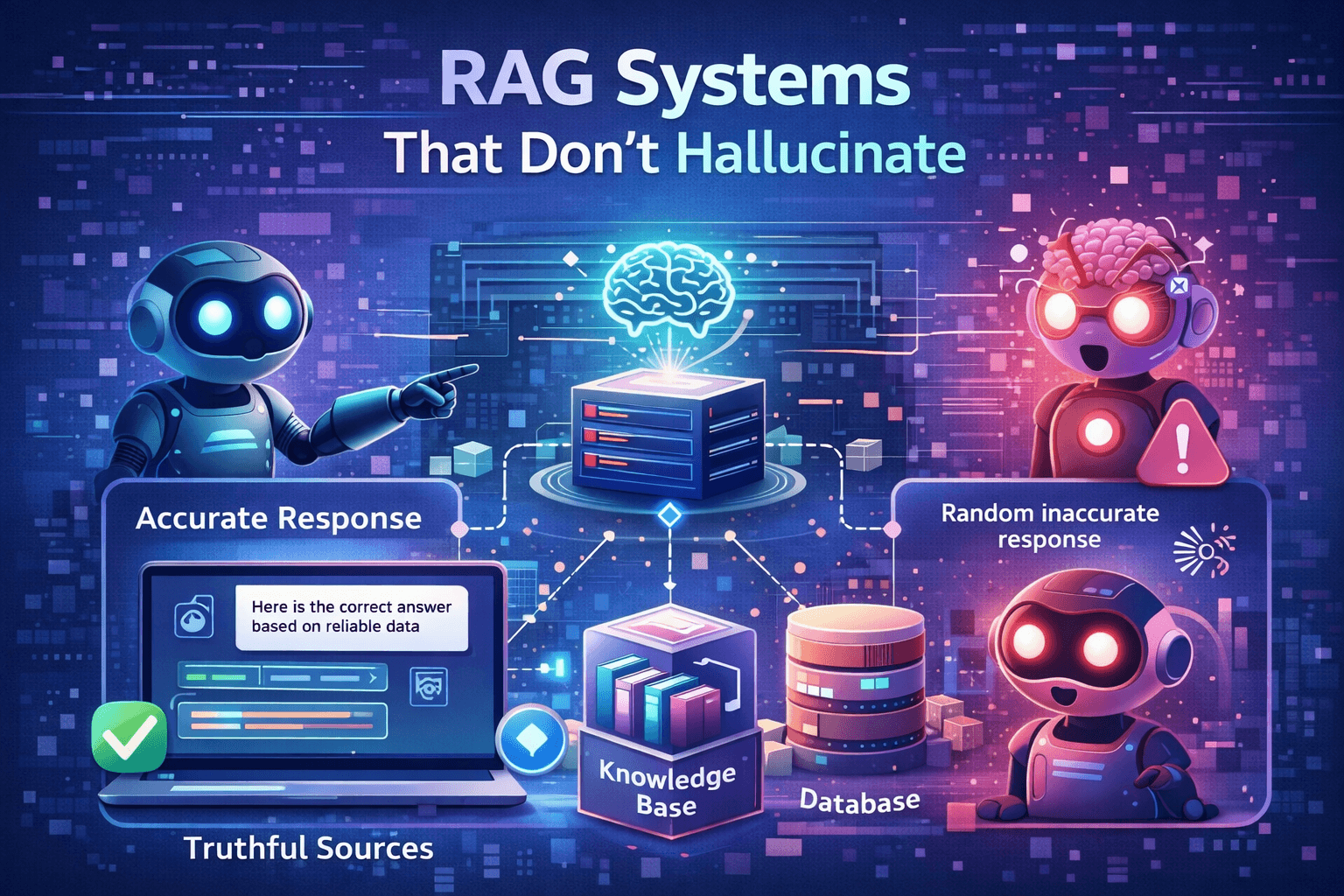

Retrieval-augmented generation (RAG) reduces hallucinations by grounding answers in trusted data. The catch: weak retrieval or poor prompting still lets errors through. Use the steps below to improve accuracy and confidence.

Why Hallucinations Happen

Hallucinations usually come from missing context, poor chunk quality, or unclear instructions. If the model can't find the answer in the retrieved context, it will often guess rather than admit uncertainty, unless you explicitly tell it to abstain.

Data Preparation

- Clean and de-duplicate source content

- Attach metadata like source, version, and owner

- Remove ambiguous or outdated documents
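The steps above can be sketched as a single pass over the corpus. This is a minimal illustration, not a full pipeline. It assumes each document arrives as an object with the hypothetical `RawDoc` fields shown, and that "outdated" means last updated before a cutoff date. It catches only exact duplicates after whitespace and case normalization. Ambiguous documents still need human review.

```typescript
// Hypothetical shape of a source document; adapt the fields to your own store.
interface RawDoc {
  id: string;
  text: string;
  source: string; // e.g. a URL or system name
  version: string;
  owner: string;
  updatedAt: Date;
}

// Lowercase and collapse whitespace so trivially different copies compare equal.
function dedupKey(text: string): string {
  return text.replace(/\s+/g, " ").trim().toLowerCase();
}

// Clean, de-duplicate, and drop documents last updated before `cutoff`.
// Only exact duplicates (after normalization) are caught; near-duplicates
// would need fuzzy matching such as MinHash.
function prepareDocs(docs: RawDoc[], cutoff: Date): RawDoc[] {
  // Newest first, so the most recent copy of a duplicate is the one kept.
  const newestFirst = [...docs].sort(
    (a, b) => b.updatedAt.getTime() - a.updatedAt.getTime(),
  );
  const seen = new Set<string>();
  const kept: RawDoc[] = [];
  for (const doc of newestFirst) {
    const text = doc.text.replace(/\s+/g, " ").trim(); // basic cleanup
    if (text === "") continue; // empty after cleanup
    if (doc.updatedAt.getTime() < cutoff.getTime()) continue; // outdated
    const key = dedupKey(text);
    if (seen.has(key)) continue; // duplicate of a newer document
    seen.add(key);
    kept.push({ ...doc, text }); // metadata (source, version, owner) stays attached
  }
  return kept;
}
```

Keeping the metadata on every record means each chunk cut from it later can carry its source, version, and owner into citations.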

Chunking Strategy

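Chunk size trades recall against precision. Smaller chunks match a query more precisely but can lose surrounding context. Larger chunks keep context but dilute relevance. Start with 300–800 tokens and keep headings with their sections so the model sees context.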

- Split by headings and semantic boundaries

- Include titles and section summaries

- Store source URLs and doc IDs with each chunk
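A heading-aware chunker covering the points above might look like the sketch below. It assumes markdown input and uses a hypothetical `Chunk` record. Headings serve as the semantic boundaries, and long sections fall back to a plain word-count split. Word count is only a rough stand-in for tokens: the default of 400 words lands roughly inside the 300–800 token range for English prose. Measure with your embedding model's tokenizer before relying on it.

```typescript
// Hypothetical chunk record: text plus the metadata needed to cite it later.
interface Chunk {
  docId: string;
  sourceUrl: string;
  title: string;
  heading: string;
  text: string; // prefixed with "title > heading" so section context travels with it
}

type Section = { heading: string; lines: string[] };

// Split a markdown document at its headings, then cap each section at
// `maxWords` words (a rough proxy for tokens).
function chunkByHeadings(
  docId: string,
  sourceUrl: string,
  title: string,
  markdown: string,
  maxWords = 400,
): Chunk[] {
  // 1. Group non-empty lines into sections, starting a new one at each heading.
  const sections: Section[] = [];
  let current: Section = { heading: title, lines: [] };
  for (const line of markdown.split("\n")) {
    const match = /^#{1,6}\s+(.+)$/.exec(line);
    if (match) {
      if (current.lines.length > 0) sections.push(current);
      current = { heading: (match[1] ?? "").trim(), lines: [] };
    } else if (line.trim() !== "") {
      current.lines.push(line.trim());
    }
  }
  if (current.lines.length > 0) sections.push(current);

  // 2. Emit one or more chunks per section, never crossing a heading boundary.
  const chunks: Chunk[] = [];
  for (const section of sections) {
    const words = section.lines.join(" ").split(/\s+/).filter(Boolean);
    for (let i = 0; i < words.length; i += maxWords) {
      chunks.push({
        docId,
        sourceUrl,
        title,
        heading: section.heading,
        text: `${title} > ${section.heading}\n${words.slice(i, i + maxWords).join(" ")}`,
      });
    }
  }
  return chunks;
}
```

The design choice here is that the heading is repeated in every piece of a long section. A chunk retrieved on its own still tells the model where it came from.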

Retrieval Quality

Use hybrid search (vector + keyword) for higher recall. Apply filters for product, region, or document type before ranking.
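Your vector and keyword retrievers may return separate ranked lists. If so, reciprocal rank fusion (RRF) is one common way to merge them. Each list adds 1 / (k + rank) for every document it contains, so documents found by both retrievers rise to the top. The sketch below applies metadata filters first, then fuses. The `Hit` shape is an assumption, and k = 60 is a commonly used default rather than a tuned value.

```typescript
// A retrieved hit from either the vector index or the keyword index (hypothetical shape).
interface Hit {
  id: string;
  metadata: Record<string, string>;
}

// Keep only hits whose metadata matches every filter (e.g. product, region, doc type).
function applyFilters(hits: Hit[], filters: Record<string, string>): Hit[] {
  return hits.filter((h) =>
    Object.entries(filters).every(([key, value]) => h.metadata[key] === value),
  );
}

// Reciprocal rank fusion over any number of ranked lists.
function reciprocalRankFusion(lists: Hit[][], k = 60, topK = 8): Hit[] {
  const scores = new Map<string, { hit: Hit; score: number }>();
  for (const list of lists) {
    list.forEach((hit, index) => {
      const rank = index + 1; // ranks are 1-based
      const entry = scores.get(hit.id) ?? { hit, score: 0 };
      entry.score += 1 / (k + rank);
      scores.set(hit.id, entry);
    });
  }
  return Array.from(scores.values())
    .sort((a, b) => b.score - a.score)
    .slice(0, topK)
    .map((e) => e.hit);
}
```

RRF uses ranks rather than raw scores. You never have to calibrate cosine similarities against keyword scores to combine them.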

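```typescript
// `search` and `query` are placeholders for your retrieval client and the
// user's question; the option names below are illustrative.
const results = await search({
  query,
  filters: { product: "docs", status: "published" }, // narrow the corpus before ranking
  topK: 8, // number of candidates to return
  hybrid: true, // combine vector and keyword matching
});
```

Grounding and Citations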

Require citations to keep answers anchored. Return the response plus the specific chunks used, and show them in the UI.
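Before showing citations in the UI, check that every cited ID points at a chunk that was actually retrieved for this query. The sketch below does that check. The `GroundedAnswer` and `RetrievedChunk` shapes and the `resolveCitations` helper are hypothetical names, not part of any specific framework.

```typescript
// Hypothetical response shape: the answer text plus the IDs of chunks it relies on.
interface GroundedAnswer {
  answer: string;
  citations: string[]; // chunk IDs
}

interface RetrievedChunk {
  id: string;
  sourceUrl: string;
  text: string;
}

// Resolve each cited ID against the chunks retrieved for this query.
// Unknown IDs are reported instead of being shown to users.
function resolveCitations(
  response: GroundedAnswer,
  retrieved: RetrievedChunk[],
): { sources: RetrievedChunk[]; unknownIds: string[] } {
  const byId = new Map(retrieved.map((c) => [c.id, c] as [string, RetrievedChunk]));
  const sources: RetrievedChunk[] = [];
  const unknownIds: string[] = [];
  // De-duplicate repeated citations while preserving their order.
  for (const id of Array.from(new Set(response.citations))) {
    const chunk = byId.get(id);
    if (chunk) sources.push(chunk);
    else unknownIds.push(id);
  }
  return { sources, unknownIds };
}
```

A non-empty `unknownIds` suggests the model cited something it was never given. One reasonable policy is to treat that answer as ungrounded and retry or abstain.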

Reranking and Filters

Add a reranker, such as a cross-encoder that scores each query–chunk pair, to reorder results by relevance. Drop chunks that score below a confidence threshold and pass only the top few to the model as citations.
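The rerank-then-filter step can be sketched as follows. `scoreFn` is a hypothetical stand-in for whatever reranker you use. It is synchronous here for brevity, whereas a hosted reranker would be awaited. The defaults `minScore = 0.5` and `keep = 4` are assumptions: a sensible threshold depends entirely on your scorer's scale.

```typescript
interface Candidate {
  id: string;
  text: string;
}

// Hypothetical reranker: returns a relevance score for a (query, passage) pair.
type ScoreFn = (query: string, passage: string) => number;

// Score every candidate, drop low-confidence ones, and keep the best few.
function rerank(
  query: string,
  candidates: Candidate[],
  scoreFn: ScoreFn,
  minScore = 0.5, // threshold below which a chunk is discarded
  keep = 4, // maximum number of chunks passed on as citations
): Array<Candidate & { score: number }> {
  return candidates
    .map((c) => ({ ...c, score: scoreFn(query, c.text) }))
    .filter((c) => c.score >= minScore)
    .sort((a, b) => b.score - a.score)
    .slice(0, keep);
}
```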

Prompt Controls

Add explicit rules: answer only from context, cite sources, and say “I don’t know” when evidence is missing. A short system prompt with a JSON schema helps enforce this.
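Here is one way to express these rules. The prompt wording, `RESPONSE_SCHEMA`, and `parseReply` are illustrative assumptions. Many LLM APIs accept a JSON schema for structured output, but parameter names differ by provider, so the schema is shown as a plain object. The parser re-validates the reply on the application side. As a deliberate design choice, it treats malformed or uncited answers as abstentions.

```typescript
// Hypothetical system prompt encoding the rules above.
const SYSTEM_PROMPT = [
  "Answer only from the provided context.",
  "Cite the ID of every context chunk you use.",
  'If the context does not contain the answer, set "answer" to "I don\'t know" and "citations" to [].',
  "Respond with JSON matching the given schema and nothing else.",
].join("\n");

// JSON Schema for the expected reply, for providers that accept one.
const RESPONSE_SCHEMA = {
  type: "object",
  properties: {
    answer: { type: "string" },
    citations: { type: "array", items: { type: "string" } },
  },
  required: ["answer", "citations"],
  additionalProperties: false,
} as const;

type ModelReply = { answer: string; citations: string[] };

// Validate raw model output; anything malformed or uncited becomes an abstention.
function parseReply(raw: string): ModelReply {
  const abstain: ModelReply = { answer: "I don't know", citations: [] };
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return abstain; // not valid JSON
  }
  if (typeof data !== "object" || data === null) return abstain;
  const { answer, citations } = data as Record<string, unknown>;
  if (typeof answer !== "string") return abstain;
  if (!Array.isArray(citations) || !citations.every((c) => typeof c === "string")) {
    return abstain;
  }
  if (citations.length === 0) return abstain; // uncited answers are not trusted
  return { answer, citations };
}
```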

Evaluation and Monitoring

Use a golden set of Q&A to measure grounding. Track answer correctness, citation coverage, and abstain rates in production.

- Offline eval: exact match + semantic similarity

- Human review: weekly error buckets

- Runtime monitoring: latency, cost, and fallback rate
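The offline metrics above can be computed over a golden set with a few counters. The `EvalCase` shape below is hypothetical. "Citation coverage" is defined here as the share of answered questions whose citations include at least one expected source, which is one reasonable reading among several. Semantic similarity is omitted because it needs an embedding model.

```typescript
// One golden-set item plus the pipeline's output for it (hypothetical shape).
interface EvalCase {
  expectedAnswer: string; // "I don't know" when the corpus cannot answer
  expectedSourceIds: string[];
  predictedAnswer: string;
  citedIds: string[];
}

const ABSTAIN = "i don't know";

// Normalize for exact match: straight apostrophes, lowercase, no punctuation.
function normalizeAnswer(s: string): string {
  return s
    .replace(/\u2019/g, "'") // curly apostrophe to straight
    .toLowerCase()
    .replace(/[.,!?;:"]/g, "") // drop common punctuation
    .replace(/\s+/g, " ")
    .trim();
}

function evaluate(cases: EvalCase[]) {
  const n = cases.length;
  if (n === 0) return { exactMatch: 0, citationCoverage: 0, abstainRate: 0 };
  let exact = 0;
  let answered = 0;
  let covered = 0;
  let abstained = 0;
  for (const c of cases) {
    const predicted = normalizeAnswer(c.predictedAnswer);
    if (predicted === normalizeAnswer(c.expectedAnswer)) exact++;
    if (predicted === ABSTAIN) {
      abstained++;
      continue; // abstentions are excluded from citation coverage
    }
    answered++;
    if (c.citedIds.some((id) => c.expectedSourceIds.includes(id))) covered++;
  }
  return {
    exactMatch: exact / n,
    citationCoverage: answered === 0 ? 0 : covered / answered,
    abstainRate: abstained / n,
  };
}
```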

Production Checklist

- Hybrid retrieval and reranking enabled

- Citations required in the response schema

- Abstain rule for missing context

- Evaluation dashboard with weekly review

FAQs

Do I need a vector database? For most RAG systems, yes. It keeps retrieval fast and scalable.

How many chunks should I pass? Start with 4–8 and adjust using evaluation results.

Can I guarantee zero hallucinations? No. Strong retrieval, citations, and abstain rules reduce the risk significantly, but they cannot eliminate it.

Need Help?

Need help building a production RAG pipeline? Our AI solutions team can help with data prep, evaluation, and deployment.